MEV MEV everywhere, where to go?

A Case Study of DAG-Based Consensus and MEV Exposure in Mysticeti, Raptr, Autobahn, and Vanilla Narwhal/Bullshark

Maximal Extractable Value (MEV) represents the value that can be (and is!) extracted from blockchain networks by those who have the ability to influence how and when transactions are processed.

Transactions are gathered into a waiting pool (the mempool) and then processed one by one. This simple mechanism is used in both Bitcoin and Ethereum.

But why is there MEV on Ethereum and none on Bitcoin? Because the Bitcoin ledger does not have a single liquidity pool.

Ethereum applies state changes linearly: each block updates the global state machine, and transaction finality spans multiple blocks to allow for possible reorgs, backed by slashing. Solana does the same, but achieves more throughput with the help of PoH: each block is effectively pre-confirmed, and posted blocks can then be attested over a longer span while the chain keeps producing new blocks. (All the steps in a transaction's life need to be laid out to correctly attribute the gains from each technical decision.)

DAG-based chains (Sui, Aptos, Sei), by contrast, aim for instant finality and use multiple concurrent validators to get more throughput (a big block needs more gas), trying to overcome two bottlenecks in one shot.

Disclaimer - for a proper visualization of each consensus mechanism, click on the link embedded in the title of its section.

Narwhal and Bullshark

Transaction Lifecycle

Transactions are sent by front-end clients to the workers of one or more validators, which then propagate these individual transactions or transaction batches among each other so that they all hold a similar (to whatever extent possible) set of transactions.

After building its block, each validator (each has its own mempool) broadcasts the block and collects 2f+1 signatures from the validators (3f+1 in total). These signatures form a certificate for the current block, which is then included in the validator's pseudo-local DAG.

Voting is done on the hash of the transaction bundle/batch rather than on the whole block. There can be several reasons why validators do not share the same mempool of transactions for a round, e.g. latency or intentional withholding.

This produces a causal history containing left-over transactions that the leader block has not committed.

The validator whose current block is the first to collect f+1 such certificates (each backed by 2f+1 votes) from the last f+1 blocks, much like a sliding window, gets its block chosen as the anchor block.

The f+1 reference rule, which resembles a sliding window, puts too much weight on a validator's latency to its peers. This makes geographical concentration of validators the ultimate centralization point for these chains, as there is no random or stake-based validator selection.

What happens to a validator that has been offline or suffers high latency due to its geographical position? It eventually catches up with the chain; the chain does not stop for it.
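The quorum arithmetic and digest-based voting described above can be sketched as follows. This is a minimal illustration, not Narwhal's actual code: the function names, the SHA-256 digest, and the length-prefixed encoding are all assumptions made for the example, and signatures are reduced to "validator X voted for digest D".

```python
import hashlib


def quorum_sizes(n: int) -> tuple[int, int, int]:
    """Return (f, f + 1, 2f + 1) for a committee of n = 3f + 1 validators."""
    f = (n - 1) // 3
    return f, f + 1, 2 * f + 1


def batch_digest(transactions: list[bytes]) -> str:
    """Hash a batch so votes can reference the digest instead of the payload.

    Each transaction is length-prefixed (an assumption of this sketch) so that
    different splits of the same bytes produce different digests.
    """
    h = hashlib.sha256()
    for tx in transactions:
        h.update(len(tx).to_bytes(4, "big"))
        h.update(tx)
    return h.hexdigest()


def is_certified(digest: str, votes: dict[str, str], n: int) -> bool:
    """votes maps validator id -> digest it signed.

    The batch is certified once 2f + 1 distinct validators signed this digest.
    """
    _, _, quorum = quorum_sizes(n)
    matching = sum(1 for signed in votes.values() if signed == digest)
    return matching >= quorum
```

Note that a voter only ever sees the digest, which is why the text later argues that a validator can vote without the transactions themselves being revealed to it.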

Ordering of Transactions

Ordering happens at two levels:

Intra-block ordering (within a single validator’s batch):

Proposers can choose any order for non-conflicting txs, while conflicting txs (e.g. same liquidity pool) must follow whatever price-priority rule they adopt (gas-fee ordering, bundle preference, etc.).

Inter-block ordering (across validators’ batches):

The anchor merges all certified batches into a “meta-block.” Some chains let the anchor put its own batch at the top (Sui’s old approach), others at the bottom (Aptos), or in any arbitrary position—each choice opens different MEV opportunities.
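To make the effect of the anchor's position concrete, here is a small sketch of the merge step. It is a toy model under stated assumptions: `merge_batches` and its parameters are hypothetical names, and ordering the non-anchor batches by validator id is an arbitrary choice made only so the example is deterministic.

```python
def merge_batches(
    batches: dict[str, list[str]],
    anchor: str,
    anchor_position: str = "top",
) -> list[str]:
    """Flatten certified batches into one "meta-block".

    batches maps validator id -> ordered list of transaction ids.
    anchor_position is "top" (anchor's batch first) or "bottom" (anchor's
    batch last). Non-anchor batches are ordered by validator id, which is
    an assumption of this sketch rather than any chain's actual rule.
    """
    if anchor not in batches:
        raise ValueError("anchor has no certified batch")
    others = [v for v in sorted(batches) if v != anchor]
    if anchor_position == "top":
        order = [anchor] + others
    elif anchor_position == "bottom":
        order = others + [anchor]
    else:
        raise ValueError("anchor_position must be 'top' or 'bottom'")
    return [tx for validator in order for tx in batches[validator]]
```

With the anchor at the top, its transactions execute before everyone else's, which favors front-running; at the bottom, the anchor sees the state left by all other batches, which favors back-running.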

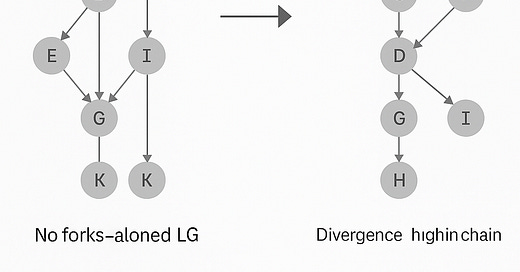

Causal History and State Divergence

Another thing to look at here is the causal history. Either the anchor can unbundle its entire causal history and build one block out of it (in Sui, the gas fee is the organizing criterion here), or the anchor cannot unbundle the blocks in its causal history and has to include them block by block.

More MEV Implications

So in vanilla Narwhal/Bullshark, the validator with the lowest latency has the best chance of being selected (this resembles a Single-Source Shortest Paths (SSSP) problem, of the kind solved with Dijkstra’s algorithm). It is therefore in the interest of such low-latency validators to delay sharing their transactions with other validators’ workers, since voting is done only on a hash that does not reveal the transactions. Other validators would in turn delay transmitting their transactions to these frequently chosen anchor nodes, leading to an all-out transaction propagation war, more and more failed user transactions, and centralization of the validator set.

This leads to a more fragmented causal history among validators.

This can be solved in two ways. One is to remove the f+1 certificate condition for choosing the anchor and instead use a randomized or stake-based approach that picks the leader immediately, for the current round or for a few rounds. The other is to keep the anchor mechanism but give every validator a way to get its own transaction batches into the final block, or simply to produce multiple leader blocks every round.
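A stake-based randomized leader, one of the alternatives suggested above, could look roughly like this. It is a sketch under assumptions: the `seed` stands in for some shared per-epoch randomness that every validator agrees on, and hashing `seed + round` with SHA-256 is an illustrative choice, not the mechanism of any specific chain.

```python
import hashlib


def stake_weighted_leader(
    stakes: dict[str, int],
    round_number: int,
    seed: bytes = b"epoch-seed",  # hypothetical shared randomness
) -> str:
    """Pick one leader per round with probability proportional to stake.

    Every validator derives the same "ticket" from (seed, round_number),
    so all of them compute the same leader without exchanging messages
    and without network latency playing any role.
    """
    total = sum(stakes.values())
    if total <= 0:
        raise ValueError("total stake must be positive")
    digest = hashlib.sha256(seed + round_number.to_bytes(8, "big")).digest()
    ticket = int.from_bytes(digest, "big") % total
    cumulative = 0
    for validator in sorted(stakes):  # fixed iteration order on every node
        cumulative += stakes[validator]
        if ticket < cumulative:
            return validator
    raise AssertionError("unreachable: ticket is always below total stake")
```

Because the outcome depends only on stake and the shared seed, a validator gains nothing from being geographically close to its peers, which is the property the f+1 sliding-window rule lacks.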

Mysticeti

Mysticeti takes a more optimistic approach to consensus than Bullshark.

Transaction Lifecycle

Transactions from clients are sent to a quorum store, which acts much like a single public mempool for all validators instead of a local mempool per validator (as in vanilla Narwhal). Once a transaction is in the pool, all validators vote on it, and if f+1 validators vote for it, the transaction is ready to be put into a block by the leader validators.

Originally: Single validator’s worker coordinated validator voting for single-owner transactions.

Now: The client sends the transaction in parallel to all validators and aggregates their signatures itself.

Multiple leaders are chosen each round by a pseudo-random algorithm with priority-based ordering, instead of the sliding window of f+1 validator certificates. This eliminates the latency-based centralization of validators.
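As a rough illustration of "several leaders per round, ranked by priority", the sketch below derives a per-round ranking from a hash. The function name, the `seed`, and the use of SHA-256 are assumptions for the example and do not describe Mysticeti's real leader schedule.

```python
import hashlib


def leaders_for_round(
    validators: list[str],
    round_number: int,
    k: int,
    seed: bytes = b"epoch-seed",  # hypothetical shared randomness
) -> list[str]:
    """Return k leaders for the round, highest priority first.

    Each validator gets a pseudo-random priority derived from
    (seed, round_number, validator id). Sorting by that priority gives
    every node the same ranking, regardless of the input order or of
    how fast any validator's messages travel.
    """
    if not 0 <= k <= len(validators):
        raise ValueError("k must be between 0 and the number of validators")

    def priority(validator: str) -> bytes:
        material = seed + round_number.to_bytes(8, "big") + validator.encode()
        return hashlib.sha256(material).digest()

    return sorted(validators, key=priority)[:k]
```

The first entry plays the role of the highest-priority leader; the remaining entries are the other leaders of the same round, in the order their blocks would be considered.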

Mysticeti uses a 3-round confirmation cycle:

Round R+1: Blocks reference leaders’ Round R proposals.

Round R+2: Validators form Quorum Certificates (QC) by referencing the Round R leader block in 2f+1 future blocks

Round R+3 (if needed): If some blocks still lack sufficient QCs, one QC referencing the original Round R leader in R+3 suffices, and retry loops repeat.

Blocks in rounds after R+3, whether confirmed or not, are held back until the initial leader block is confirmed (this causes large latency even under small message drops, as the whole chain halts).

This style of consensus is possible because all validators hold more or less the same view of current transaction data, thanks to the single public mempool (quorum store). As a result they do not have to propagate transactions among themselves and vote on batches of transactions.

On the confirmation of blocks - It moves away from the optimistic addition approach, where a block would theoretically never be skipped. In that model, the only parameter was latency—how late that block would be committed.

But now, it changes to a system with three conditions:

Committed blocks — These are blocks that:

Receive 2f + 1 references in the next round (round r), and

Are themselves a block that references 2f + 1 blocks in round r.

Undecided blocks — These are not yet committed, but can still be decided within a maximum window of three blocks ahead.

Skipped blocks — If a block is not decided within this three-block window, it is considered skipped.

However, this “skipped” condition is somewhat ambiguous, as there are multiple waiting periods (where the current leader waits for the previous leader to propagate its block through the certificate pattern) before a block is officially considered skipped.
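The three outcomes above can be summarized in a few lines. This is a deliberately simplified sketch: it models only the "2f + 1 references" condition and the three-round window as described in this article, ignores the waiting periods that make "skipped" ambiguous in practice, and uses hypothetical names throughout.

```python
def classify_block(
    references_received: int,
    rounds_elapsed: int,
    f: int,
    window: int = 3,
) -> str:
    """Classify a leader block as 'committed', 'undecided' or 'skipped'.

    references_received: how many later blocks reference this block.
    rounds_elapsed: rounds that have passed since the block's own round.
    window: decision window in rounds (three, per the description above).
    """
    quorum = 2 * f + 1
    if references_received >= quorum:
        return "committed"
    if rounds_elapsed < window:
        return "undecided"  # may still gather enough references
    return "skipped"  # window closed without a quorum of references
```

For example, with f = 1 a block needs three references: `classify_block(2, 1, f=1)` is still undecided, while the same block after three rounds is treated as skipped.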

Ordering of blocks

Mysticeti’s slot has no merged meta-block; instead, each leader’s block finalizes one after another in a single lane. Thus all MEV happens “intra-block”, within the block you propose:

Intra-block ordering splits into two rules:

Non-conflicting txns can be reordered arbitrarily.

Conflicting txns (e.g., same liquidity pool) must follow gas-price priority, though high-fee bundles still get favored.

In addition, SIP-19 soft bundles commit with high probability, acting like PBS bundles.

So, looking only at the transaction ordering rules, Mysticeti confines all MEV extraction to each validator’s own block ordering. Leaders can still front-run, back-run, arbitrage, and sandwich, but only within the single block they control, and only provided the leader honors the gas-fee ordering.

Any cross-proposer or cross-block strategy becomes a one-block affair, since a validator cannot be selected as leader for two consecutive blocks when there are multiple leaders per round. This reduces both the granularity and the predictability of MEV opportunities.
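The two ordering rules (free order for non-conflicting transactions, gas-price priority for conflicting ones) can be expressed as a simple validity check. The `Tx` type and the single `touched_object` field are simplifying assumptions; real transactions can touch several shared objects.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tx:
    tx_id: str
    touched_object: str  # e.g. a liquidity pool id (one object per tx here)
    gas_price: int


def respects_gas_priority(order: list[Tx]) -> bool:
    """Check a proposed block order against the gas-price rule.

    Transactions touching different objects may appear in any relative
    order. Transactions touching the same object must appear in
    non-increasing gas-price order.
    """
    last_price: dict[str, int] = {}
    for tx in order:
        previous = last_price.get(tx.touched_object)
        if previous is not None and tx.gas_price > previous:
            return False  # a cheaper tx was placed ahead on the same object
        last_price[tx.touched_object] = tx.gas_price
    return True
```

A leader that wants to front-run a swap on a given pool therefore has to outbid it on gas price, whereas it is free to place unrelated transactions wherever it likes.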

Causal history

Causal history is greatly reduced in Mysticeti. It originates from differing local views of transaction data among validators, and the public mempool (quorum store) makes transaction data equally accessible to all. Some ways of ending up with causal history remain, though:

Network Latency & Packet Loss - Even if clients broadcast to all validators, some messages arrive faster (or at all) to some nodes, so validators sign availability at slightly different times. A validator that hasn’t yet seen enough signatures will delay a tx entering its local mempool.

Honest Filtering or Censorship - A validator might drop or deprioritize certain transactions (e.g. spam or known-bad behavior) before signing availability. That choice isn’t globally visible in the quorum store.

Validation Failures - If a tx fails local checks (insufficient balance, malformed signature), one validator will reject it while others accept it, creating a permanent local-state difference.

More MEV implications

Because of all the non-deterministic factors above, searchers cannot be sure that their transaction will be included first, so they rely on spamming transactions to cope with the unpredictability of transaction ordering.

Collecting a quorum signature before a transaction is included is roughly a three-message process. It lets every validator learn of the transaction before it lands in a block, which keeps the game fair among validators. What ultimately decides the outcome, however, is the priority of a leader in the current round relative to all the other leaders.

Raptr(Aptos)

Raptr merges Mysticeti’s optimistic approach with the original Bullshark’s pessimistic one. It combines an optimistic DAG layer, where validators freely gossip and certify transaction batches without awaiting votes on each one, with a pessimistic BFT consensus step each round, in which a single leader proposes a compact “cut” of that DAG and everyone runs a prepare-and-commit vote on it.

This hybrid lets data flood the network quickly (optimistic availability) while still guaranteeing safety and finality through a small, rigorous consensus vote on the DAG snapshot (pessimistic finality).

Transaction Lifecycle

Getting transactions to the validators works as in the original Narwhal/Bullshark: transactions are sent to the validators’ workers rather than to a quorum store as in Mysticeti. The main difference lies in how consensus is formed.

All validators build their own blocks, as in Bullshark, but each block is divided into multiple transaction batches that are ordered with the help of a Minimum Batch Age, and a single leader validator is chosen by a pseudo-random algorithm.

Minimum Batch Age: Validators only certify and gossip batches once they’ve “aged” through a fixed minimum epoch in the DAG, so everyone has had a chance to see and validate each new batch before it’s eligible for inclusion.

The validators then forward these transaction batches to the other validators, and two rounds of voting are held. The first produces a Quorum Certificate, requiring f+1 validators to hold the same prefix of transaction batches, which ensures data availability. In the second, the most prevalent prefix order of batches among 2f+1 validators is confirmed as the block.

No further voting is required beyond a single round of votes, after which the block is committed.

This mitigates a problem of optimistic DAG consensus such as Mysticeti, where even a small percentage of dropped messages leads to high latency because round advancement is not guaranteed, for instance when the leader goes offline. In Raptr, a Timeout Certificate (TC) formed from 2f+1 votes from honest validators moves the global state to the next round even if the leader cannot communicate. And thanks to the prefix method, even under heavy disagreement about transaction batch (prefix) ordering, the chain proposes the block with the smallest number of batches that has a quorum.
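One way to read the prefix method is: each validator reports how long a prefix of the leader's batch list it actually holds, and the block is cut at the longest prefix that a quorum can vouch for. The sketch below implements that reading. It is an interpretation for illustration only, with hypothetical names, and should not be taken as Raptr's exact voting rule.

```python
def committable_prefix(prefix_lengths: list[int], quorum: int) -> int:
    """Longest prefix length held by at least `quorum` validators.

    prefix_lengths[i] is the number of leading batches (in the leader's
    proposed order) that validator i reports having received.
    Returns 0 (an empty block) when fewer than `quorum` validators voted
    or when the quorum only agrees on the empty prefix.
    """
    if quorum <= 0:
        raise ValueError("quorum must be positive")
    if len(prefix_lengths) < quorum:
        return 0
    ranked = sorted(prefix_lengths, reverse=True)
    # The quorum-th largest report is held by at least `quorum` validators.
    return ranked[quorum - 1]
```

With reports `[5, 3, 4, 1]` and a quorum of 3, the block is cut after 3 batches: three validators hold at least that much. Heavy disagreement shrinks the block instead of stalling the round, which matches the latency argument made above.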

Ordering

Ordering can be viewed at the inter-batch and intra-batch level. Within a single batch of transactions, the order is deterministic, with the priority gas fee as the biggest determining factor. Within the block, sandwiching and back-running are much harder, because an attacker does not know exactly where in the block the victim’s txn will land once the shuffle runs. The shuffle is a deterministic ordering in which transactions from the same sender are set as far apart from each other as possible, to reduce the number of conflicts and re-executions during parallel execution, with reduced MEV as a side effect. But because the shuffle is deterministic, an attacker can still extract MEV by exploiting its very predictability. For example, they can flood the mempool with high-gas-price “spacer” transactions whose sender addresses and nonce gaps are chosen to land immediately before or after a victim’s transaction.

Causal History or State Divergence

Voting on a single global view of transactions each round eradicates the causal history seen in vanilla Bullshark: the leader validator no longer has an edge, the causal history of all validators is more or less the same, and no leader can build a block from a causal history that differs markedly from the other blocks.
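The idea of a deterministic, sender-aware shuffle can be illustrated with a round-robin over senders. This is a toy stand-in, not Aptos's actual shuffler: it only shows why spreading a sender's transactions apart is both deterministic and, for the same reason, predictable to an attacker who knows the input.

```python
from collections import deque


def sender_aware_shuffle(txs: list[tuple[str, str]]) -> list[tuple[str, str]]:
    """Deterministically spread transactions from the same sender apart.

    txs is a list of (sender, tx_id) pairs in priority order. Transactions
    are taken round-robin, one per sender per pass, while preserving each
    sender's own relative order (so nonces stay valid).
    """
    queues: dict[str, deque] = {}
    for sender, tx_id in txs:
        queues.setdefault(sender, deque()).append((sender, tx_id))

    result: list[tuple[str, str]] = []
    while queues:
        # Iterate over a snapshot because exhausted senders are removed.
        for sender in list(queues):
            result.append(queues[sender].popleft())
            if not queues[sender]:
                del queues[sender]
    return result
```

Since the output is a pure function of the input list, anyone who can observe or influence that list (for example by adding transactions from fresh sender addresses) can compute in advance where a given transaction will land.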

All the ways of producing causal history, such as:

Late or Dropped DAG Messages: Packet loss or congestion can delay or drop some batches on certain nodes, creating temporary gaps in their local view until the agreed cut is applied.

Local Filtering Policies: Validators’ private admission rules (rate limits, spam filters, censorship) can cause different subsets of batches to enter the DAG, leading to subtle divergence despite a shared prefix.

Validation Failures: A node rejecting malformed batches (bad signatures, insufficient funds, nonce errors) while others accept them causes momentary mismatches in what each validator considers available.

are eliminated, because if the validators cannot align on a prefix, the blocks would be empty and no one would profit, except maybe Sui (not a joke :0). This would require most of the validators to be malicious.

More MEV Implication

Reduced Sandwich Surface (No Cross-Block Merges): Because each round finalizes a single agreed‐upon DAG cut rather than merging multiple competing blocks, attackers cannot spread a sandwich attack across different proposers’ blocks in the same slot. They must sandwich entirely within the leader’s batch sequence—significantly shrinking the attack window.

Less Frontrunning (Shared Prefix) but Still Possible Within the Cut: With everyone executing the same ordered list of batch digests, frontrunners lose the edge of private batch knowledge. However, once the leader orders that cut, it can still inspect the finalized batch list and slip its own high-priority txns just ahead of victims, as long as they reside in the same cut.

Bundle Tactics and Fill-Level Attacks: Leaders choose which bundles to include up to the block gas limit. By flooding the DAG with a mix of high-fee bundles and filler transactions, an attacker can force out competing bundles or shape the final inclusion set—extracting value by controlling the block’s effective capacity.

Raptr overcomes most of the MEV extraction seen in Narwhal/Bullshark by making causal history a thing of the past (pun intended) and by enforcing a deterministic ordering within each block through pseudo-random shuffling.

SEI

Sei takes a new approach to building blocks, mixing all of the mechanisms we have seen above.

Transaction Lifecycle

Transactions are sent by clients directly to the validators. There is no quorum store (single public mempool) and no propagation of transactions among the validators, which leads to transaction fragmentation at the validator level.

But the silver lining here is that all of these batches are added in the same slot to a single block and are publicly visible.

Each validator then builds its batches in parallel and sends each batch to the other validators until f+1 validators vote on it, giving it a PoA (proof of availability).

A leader validator is then selected based on stake weight. It bundles the batches of all 3f+1 validators together into a block and propagates it back to all the validators for a 2f+1 vote quorum, after which the block receives a Quorum Certificate (QC) confirming it.
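The two thresholds in this lifecycle (f+1 for availability, 2f+1 for the block itself) can be sketched as below. The helper names and the vote-count inputs are hypothetical, and ordering batches by validator id is a placeholder for whatever merge rule the leader actually applies.

```python
def build_block(
    batches: dict[str, list[str]],
    poa_votes: dict[str, int],
    f: int,
) -> list[str]:
    """Assemble the leader's block from batches that hold a PoA.

    batches maps validator id -> its batch of transaction ids.
    poa_votes maps validator id -> availability votes its batch received.
    A batch is eligible once it has at least f + 1 votes. Batches without
    a PoA (late or offline validators) are simply left out of this round.
    """
    eligible = [v for v in sorted(batches) if poa_votes.get(v, 0) >= f + 1]
    return [tx for validator in eligible for tx in batches[validator]]


def has_quorum_certificate(block_votes: int, f: int) -> bool:
    """The merged block is confirmed once 2f + 1 validators voted for it."""
    return block_votes >= 2 * f + 1
```

For example, with f = 1 a batch that gathered only one availability vote is left out of the block, and the merged block itself needs three votes before it counts as confirmed.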

Sei takes on a fully pessimistic approach compared to Mysticeti and Raptr where voting is done both for data and consensus but it is much less deterministic in exactly which transactions end up in the final block and how they’re ordered. Because each validator builds its own batch from a fragmented mempool and the stake-weighted leader is free to merge those batches according to configurable rules (gas-priority, batch-auctions, randomized shuffles, etc.), the precise block content and ordering can vary based on network timing, stake distribution, and protocol parameters—giving Sei both high throughput and a tunable MEV surface.

Causal History

While the leader gathers all the validator batches, any batch that is missing due to latency or an offline validator is skipped, and consensus is taken over the n validators that responded instead of all 3f+1. If the missing validators come back online with a batch that the leader block did not include, they can merge it later. This differs from the chain stagnation seen in Mysticeti.

This way the causal history of each validator is settled in each round, but at the cost of losing some transactions, since transactions are not propagated between validators before consensus.

MEV Implications

Sending all the transaction data for PoA (f+1 votes) opens a new MEV vector, public DA MEV: when validators send each other their full block of transactions for commits/votes, one validator can easily copy another validator’s transactions and combine them with its own to capture the MEV.

This also leads to dynamic transaction ordering within each batch by different validators, which makes it very easy to slip MEV transactions in unnoticed, but also allows many different orderings per validator.

This leads to application-specific sequencing, which is a boon because it accommodates a wider range of transaction designs, but also a bane because it enables explicitly malicious activity. That can be controlled by introducing slashing, and here the public DA MEV vector becomes an advantage, as multiple validators can then try to profit heavily from those malicious transactions.

1. Validator-to-Validator MEV Surfacing (DA Layer MEV)

Because each validator shares full transaction batches with peers (during PoA), any validator can read unconfirmed txs before consensus and:

Copy & front-run arbitrage or liquidation txs.

Back-run trades or swaps in protocols that haven’t obscured logic.

Repackage other validators’ txs with their own for better placement in final block (classic bundle jacking).

This creates a free-for-all MEV auction before consensus is finalized.

2. Validator ↔ App Coordination: Collusion Risks & Vectors

Now, if an application is malicious—or even just subtly self-interested—it can embed validator-aware logic into how it emits or prioritizes transactions:

DEXs or oracles can send key txs only to a known validator, ensuring they front-run their own liquidity events.

The app intentionally delays propagation so that only colluding validators get a batch in time for PoA voting.

Now, validators have incentive to form relationships with applications for exclusive MEV, leading to validator favoritism, censorship, and opaque order flows.